Building API Platforms With Scale

I’ve spent a good part of the last 15 years designing and building APIs for various large, complex, distributed systems. I’ve even written a couple of books about it: Microservice Architecture and Microservices Up and Running.

Despite my many years in the API space and my clear commitment to it, the most significant lesson I’ve learned is this: APIs, in themselves, don’t matter. They are simply interfaces to platforms. What truly matters is our ability to build scalable platforms, as that’s where the real delivery of tangible business value resides. Allow me to explain.

First, let’s clarify what we mean by “a platform” and “scale”. In the context of this discussion, APIs are individual actions such as making a comment, updating a customer record, or processing a payment. Platforms, on the other hand, should deliver holistic business value. They consist of a carefully curated list of affordances: APIs, events, and operating models. For instance, an accounting platform should provide a comprehensive solution for all the accounting needs of its customers. A customer platform should manage the entire lifecycle of customers, and a commenting platform should oversee all aspects of commenting, including moderation and fraud protection.

Similarly, the term “scale” is used in a very specific context in this post. Here, scalability doesn’t merely refer to handling high read traffic, large data volumes, or intense transaction rates. While a robust platform should be equipped to manage these, by “scalability” we primarily mean designing a platform in such a universally applicable manner that it evolves painlessly over time and its users discover new ways to leverage it, ways that the platform’s creators might never have envisioned. In essence, we’re discussing the use-case scalability of platforms. If we were to liken a platform to a startup business, we’d be interested in understanding how we could scale up its reach beyond its original purpose.

Storytime

To illustrate my perspective on APIs and Platforms, I want to tell you a story. It starts in the heart of a large enterprise, and at its center is an experienced, dedicated, and brilliant CIO. We will call her Michelle. Michelle is a highly motivated technology executive. She is driven by her passion for efficiency through technological innovation. And she has what she would describe as a healthy amount of contempt for any kind of wasteful activity.

On the day in question, Michelle was reviewing organizational-level performance metrics with her leadership team, to identify any wasteful trends. Going through various numbers and dashboards, her heart was starting to sink. It became glaringly apparent that numerous teams within her organization were repeatedly reinventing the wheel. As a seasoned executive, Michelle wasn’t prone to impulsive reactions. However, the data was undeniable — it was a call to action. A renewed emphasis on reuse was imperative. With resolute promptness, she decreed that the central architecture team would henceforth review all major new API endeavors. Their mandate was to pinpoint redundant efforts and grant “permissions to build” only when absolutely warranted.

Typically, these permissions would favor platform projects that encompassed a comprehensive set of unique, cohesively interlinked APIs, providing overarching business value. For example, the accounting division would be responsible for a single API suite that caters to all accounting-related needs. In a similar vein, the Enterprise Customer Platform would serve as the central hub for all API interfaces handling customer data.

Total Cost of Reuse (TCR)

As Michelle was explaining her new mandate and taking feedback on the implementation details from her team, she noticed that a key leader on her team was silent and had a concerned look on his face. John had been Michelle’s trusted lieutenant for years. He was usually outspoken, and the uncharacteristic silence was troubling. Michelle asked John what was on his mind.

– I am worried about “total cost of reuse in time”, responded John.

“Total Cost of Reuse in Time?” Nobody in the room had ever heard of such a concept, so Michelle asked John to explain what he meant.

John pointed out that when focusing on reuse, there’s a common pitfall: failing to assess the long-term costs and benefits. All too often, we zero in on the immediate advantages of reuse at the point of decision, overlooking the long-term implications of both reuse and centralization. Imagine a scenario where multiple departments in a large company require a particular functionality. Let’s use an online retailer as an example. Suppose all the business units decide they want to launch loyalty and rewards programs. Recognizing this as a need across many divisions, one might conclude that it’s an ideal candidate for reuse. Consequently, a centralized implementation might seem like the best route. At first glance, a centralized approach does appear beneficial. Rather than creating reward functions for retail customers, then again for small business customers, and yet again for large commercial clients, a single, centralized solution seems efficient. It promises to save time and resources. Celebrations all around! Surely everyone’s in for a big holiday bonus, right?

However, let’s project ourselves 3 to 6 months into the future. Suppose the commercial business unit wishes to modify their rewards program or introduce new features because they have found a very financially lucrative opportunity that such modifications would enable. Since the rewards system is centrally managed and universally shared, the following steps become necessary:

- The commercial business unit cannot make the changes on their own. They need to align with the central Rewards organization’s product roadmap to schedule the desired changes.

- If the proposed change isn’t backwards-compatible, the central Rewards organization must communicate with all other business units utilizing the Rewards system to coordinate the changes, ensuring no disruption.

This mandatory coordination can considerably delay the commercial business unit’s desired changes. It might extend the waiting period from mere weeks, had they handled it independently, to potentially several months, as they must now sync with both the central Rewards team and numerous other departments.

Over time, as changes accumulate, the initial advantages of system reuse can wane. Each subsequent modification necessitates a coordination effort, causing delays and potentially increasing costs. With a high enough rate of change, the centralized Rewards system might shift from being perceived as a collective asset to a shared burden.

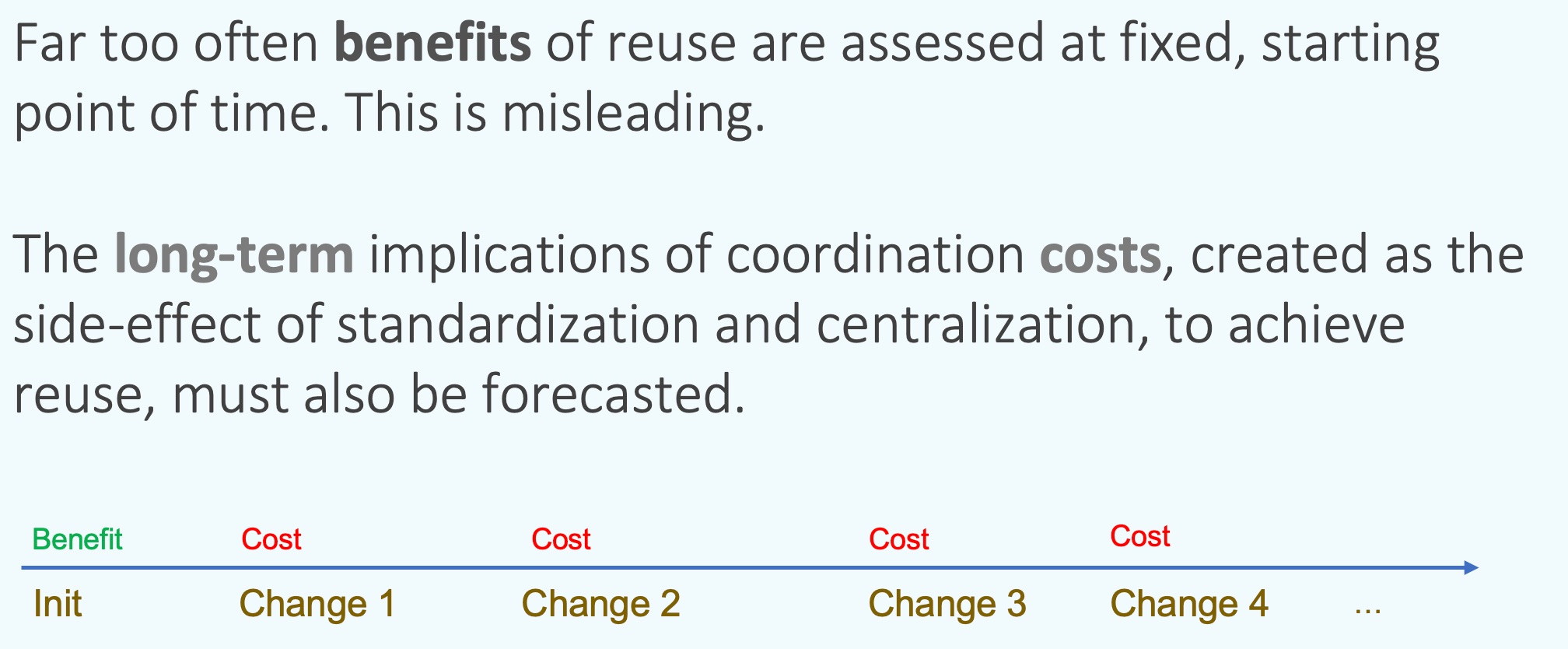

Assessing the benefits of reuse and centralization only at a project’s outset can be misleading. Everything might seem rosy initially, but over an extended timeframe, the complications of change coordination can considerably erode or even negate the initial advantages. This underscores the importance of evaluating the Total Cost of Reuse (TCR) over the long term, rather than merely at the beginning.
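To make John’s point a little more concrete, here is a toy model of how the net benefit of reuse can erode over time. It is only a sketch: the function names, the linear cost model, and every number in it are my own illustrative assumptions, not measurements from any real organization.

```python
# Toy TCR model. All names and numbers are hypothetical and for illustration only.

def cumulative_net_benefit(upfront_saving, changes_per_month,
                           coordination_cost_per_change, months):
    """Return the net benefit of centralizing at the end of each month.

    Assumes a one-time saving at the outset and a fixed coordination
    cost for every change made to the shared system afterwards.
    """
    timeline = []
    net = upfront_saving
    for _ in range(months):
        net -= changes_per_month * coordination_cost_per_change
        timeline.append(net)
    return timeline


def first_negative_month(timeline):
    """Return the first month (1-based) where reuse is a net loss, or None."""
    for month, net in enumerate(timeline, start=1):
        if net < 0:
            return month
    return None


# A shared Rewards system that saved 100 units up front, but needs
# 2 coordinated changes a month at 10 units of coordination cost each.
timeline = cumulative_net_benefit(
    upfront_saving=100,
    changes_per_month=2,
    coordination_cost_per_change=10,
    months=12,
)
print(first_negative_month(timeline))  # prints 6
```

Judged only at the outset, the decision looks like a clear win of 100 units. Under these assumed numbers, it turns into a net loss in the sixth month.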

Does acknowledging TCR mean that we should avoid shared services or the creation of central platforms entirely? Not at all. It simply suggests that we need to be keenly aware of the long-term costs of reuse and regularly evaluate a platform throughout its lifecycle. Grasping the implications of TCR helps in devising strategies to manage and mitigate its associated costs.

Expected Frequency of Change

In particular, the expected frequency of change stands out as a vital consideration. TCR tells us that subsequent modifications can make centralization exorbitantly expensive. But if we centralize a function that is inherently stable and changes infrequently, the later alteration costs may remain lower than the initial benefits of reuse, and the entire initiative can still be worthwhile. This is why we insist that any functionality centralized in a shared platform should be stable and predictable, and shouldn’t be expected to undergo frequent changes. Capabilities that evolve slowly are the best fit for centralization and reuse. Conversely, those that change often tend to become cost-prohibitive in the long run, even if they address needs shared across many stakeholders.
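One way to turn this into a quick back-of-the-envelope check is to ask how many changes per year a centralized capability can absorb before coordination costs eat up the upfront saving. The sketch below assumes a simple linear cost model; the function names, parameters, and numbers are all hypothetical.

```python
# Break-even change frequency for a centralized capability.
# Hypothetical names and numbers; assumes coordination cost grows linearly.

def break_even_changes_per_year(upfront_saving, coordination_cost_per_change,
                                horizon_years):
    """Highest change rate at which reuse still pays for itself over the horizon."""
    return upfront_saving / (coordination_cost_per_change * horizon_years)


def worth_centralizing(expected_changes_per_year, upfront_saving,
                       coordination_cost_per_change, horizon_years):
    """True if the expected change rate stays at or below the break-even rate."""
    limit = break_even_changes_per_year(
        upfront_saving, coordination_cost_per_change, horizon_years
    )
    return expected_changes_per_year <= limit


# Same assumed saving (120) and coordination cost (10) over a 3-year horizon.
print(break_even_changes_per_year(120, 10, 3))  # prints 4.0
print(worth_centralizing(1, 120, 10, 3))        # slow-moving capability: True
print(worth_centralizing(12, 120, 10, 3))       # fast-moving capability: False
```

The exact figures matter far less than the shape of the question: before centralizing anything, estimate how often it is likely to change.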

A prime example of focusing on evergreen capabilities is seen in public cloud platforms like AWS, Google Cloud, and Azure. They prioritize the development of features that are highly focused and resistant to the need for frequent change. Take AWS’s Simple Storage Service (S3) as a case in point. I’m continually amazed by S3’s journey. Launched approximately 15 years ago primarily for hosting static web resources like CSS files and images, its basic API interface is now employed to construct vast enterprise data lakes, fueling intensive machine-learning projects. Fifteen years ago, it was inconceivable that S3 would serve such advanced purposes; many of these modern applications for S3 simply didn’t exist back then. This epitomizes true platform scalability: developing a platform that users adapt for purposes vastly beyond the original vision of its creators. Designing platforms with such a level of evolvability ensures their enduring relevance, and S3’s evolution offers invaluable lessons for other platforms.

Three Pillars of Scalable API Platforms

The principles of closely monitoring TCR and steering clear of frequently-changing capabilities have distilled into three specific guidelines that we’ve successfully integrated into our practices:

1. Platforms shouldn’t act as regulators of common standards for applications built upon them

In many large corporations, when teams build platforms (formerly known as “shared enterprise systems”), there’s often an inclination to sidestep the diverse needs of consumers. This is done by directing the platform teams to establish rigid standards on elements like data models and process workflows. This approach rarely succeeds. Firstly, achieving consensus on standardization is such an arduous process that years can be spent refining the standard—a laborious and frequently thankless endeavor. Such delays hinder the timely release of functional, valuable products. Additionally, data model standards are notoriously fragile. Often, by the time a consensus on a standard is achieved, it’s already on the brink of obsolescence.

Contrast this with the S3 platform as a counterexample. S3 doesn’t prescribe a specific data model for file storage and refrains from setting rigid standards. The platform didn’t get bogged down in protracted standard negotiations among its vast user base. Furthermore, it certainly doesn’t dictate the methodology for storing files.

For those aspiring to develop a successful platform, acting as the custodian of data and standards is the last role one should assume. Instead, the primary objective ought to be the introduction of universally appealing features. Look at S3’s approach: its creators didn’t convene every web developer to hammer out a standard. They simply rolled out an intuitive solution that resonated with most users. Such universally acceptable features are what platform builders should aim to incorporate. To cater to the unique requirements of platform users, it’s more effective to offer extensibility points within the platform rather than attempting to accommodate every conceivable feature in a “one-size-fits-all” manner.
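As a rough illustration of what an extensibility point might look like, here is a minimal sketch of a storage primitive that treats content as opaque bytes, accepts free-form metadata, and lets consumers attach their own behavior through a hook. The `BlobStore` class and its methods are hypothetical names I made up for this example; this is not S3’s actual API.

```python
# Hypothetical platform primitive: no prescribed data model, one extensibility point.
from typing import Callable, Dict, List, Optional, Tuple

# A hook receives the key, the raw bytes, and the consumer-supplied metadata.
Hook = Callable[[str, bytes, Dict[str, str]], None]


class BlobStore:
    """Stores opaque bytes under a key; the platform never inspects the content."""

    def __init__(self) -> None:
        self._objects: Dict[str, Tuple[bytes, Dict[str, str]]] = {}
        self._on_put: List[Hook] = []

    def register_on_put(self, hook: Hook) -> None:
        """Extensibility point: consumers plug in their own logic here."""
        self._on_put.append(hook)

    def put(self, key: str, data: bytes,
            metadata: Optional[Dict[str, str]] = None) -> None:
        meta = dict(metadata or {})
        self._objects[key] = (data, meta)
        for hook in self._on_put:
            hook(key, data, meta)

    def get(self, key: str) -> bytes:
        return self._objects[key][0]


# Consumer side: the accounting team wants an audit trail. That logic lives
# in their hook, not in the platform.
audit_log: List[str] = []
store = BlobStore()
store.register_on_put(lambda key, data, meta: audit_log.append(key))
store.put("invoices/2024-01.csv", b"id,amount\n1,100\n", {"team": "accounting"})
print(audit_log)  # prints ['invoices/2024-01.csv']
```

The platform stays small and stable, while each consumer keeps ownership of the parts that are specific to them and likely to change.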

2. Platforms should refrain from orchestrating calls to other platforms on behalf of consumers

The inspiration for this principle can be traced back to the Unix Philosophy of modular software development. Here, the platform (Unix) offers basic utilities, allowing users to freely combine (pipe) them in endless ways. While the platform develops these tools, it makes minimal assumptions about their subsequent combinations. Instead, it focuses on providing standardized inputs and outputs for seamless orchestration. In the realm of platforms, this translates to supplying functionalities as a set of “primitive” (meaning basic and foundational, not unsophisticated) APIs that the consumer can organize, without the platform itself handling any orchestration.

It’s essential to sidestep orchestration, primarily because it implies a predetermined workflow. Workflows, by nature, are fragile and subject to constant changes. The TCR principle warns us to steer clear of elements prone to frequent change. Consider, for instance, an order fulfillment platform. It could either implement various steps of the fulfillment process as APIs, allowing you to construct a sequence that suits your needs, or it might fully implement an end-to-end fulfillment workflow. With the latter, any deviation or customization you require transforms into a coordinated change challenge. While the end-to-end approach might seem like a convenient, ready-made solution initially, it offers only short-lived advantages. In the long term, it becomes a significant contributor to change-associated costs.
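The order fulfillment example can be sketched in a few lines. Below, the platform exposes only small primitives that share a uniform input and output, and each consumer composes its own sequence, much like piping Unix utilities together. All function names, the `Order` type, and the two flows are hypothetical and purely illustrative.

```python
# Primitives composed by the consumer, not orchestrated by the platform.
# Every name here is hypothetical.
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Order:
    order_id: str
    events: List[str] = field(default_factory=list)


# Platform side: each primitive takes an Order and returns an Order.
def reserve_inventory(order: Order) -> Order:
    order.events.append("inventory_reserved")
    return order


def charge_payment(order: Order) -> Order:
    order.events.append("payment_charged")
    return order


def create_shipment(order: Order) -> Order:
    order.events.append("shipment_created")
    return order


# Consumer side: the workflow is owned by whoever needs it.
def run(order: Order, steps: List[Callable[[Order], Order]]) -> Order:
    for step in steps:
        order = step(order)
    return order


retail_flow = [reserve_inventory, charge_payment, create_shipment]
# Commercial clients are invoiced later, so their flow skips the charge step.
commercial_flow = [reserve_inventory, create_shipment]

print(run(Order("A1"), retail_flow).events)
print(run(Order("B2"), commercial_flow).events)
```

When the commercial unit needs a different sequence, it edits its own list of steps. Nothing on the platform side has to change, and no other business unit has to be consulted.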

3. Platforms must refrain from implementing consumer-specific logic

Building platforms often comes with the allure of trying to be everything to everyone. It’s not uncommon to want to over-deliver, proving the platform’s worth and attempting to encompass more than its intended scope. However, it’s vital to establish a clear boundary between the platform’s core functionalities and the responsibilities of its users, who craft end-user products atop this foundation. Platforms must resist the ‘crowd-pleasing’ pitfall. While initially promising a fully ‘turnkey’ solution might garner interest, becoming an impediment to adaptability in the long run will only sour the experience for both the platform developers and their users.

Consider the difference between S3 and services like Dropbox or Google Drive. Each is successful within its domain, but platforms like Google Drive or Dropbox aren’t typically used for building data lakes or feeding machine-learning models. Why? Because Dropbox and Google Drive are tailored for specific user experiences, rendering them less adaptable for diverse applications. Despite probably leveraging technology akin to S3’s, their consumer-specific logic limits scalability. In contrast, S3’s success stems from its minimalistic capabilities and its decision not to presuppose how it will be used, which allows it to scale across a plethora of use-cases.

In conclusion

As we wrap up, I’d like to leave you with this one thought. The era of a wild forest of APIs within major corporations has come to an end. The future lies in purpose-built platforms that are self-service and delivered as APIs and Streams. The enduring success of these platforms hinges on their architects’ profound understanding of the Total Cost of Reuse in Time and their adeptness in selecting stable, focused, slow-evolving capabilities that express the strong identity they define for their platforms.